Agglomerative Clustering in Python Using sklearn Module

Agglomerative clustering is a hierarchical clustering method that groups data points into clusters based on their similarity. In this article, we will discuss how to implement agglomerative clustering in Python using the sklearn module.

What Is Agglomerative Clustering?

It is a bottom-up hierarchical clustering approach in which each data point is initially considered a separate cluster and is then merged with other clusters as the algorithm progresses. We merge the clusters based on a distance or similarity measure, such as euclidean distance or cosine similarity.

The process of agglomerative clustering can be summarized as follows:

- Each data point is treated as a separate cluster.

- The algorithm iteratively merges the most similar clusters until all data points are in a single cluster or a predetermined number of clusters is reached.

- The resulting clusters are represented as a tree-like structure, known as a dendrogram.
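The steps above can be sketched in a few lines of NumPy. This is only an illustrative, unoptimized sketch and not how sklearn or scipy implement the algorithm. The function name naive_agglomerative is hypothetical, and average linkage is assumed as the merge criterion.

```python
import numpy as np

def naive_agglomerative(points, n_clusters):
    """Hypothetical helper: merge the two closest clusters until n_clusters remain."""
    points = np.asarray(points, dtype=float)
    # Step 1: every point starts as its own cluster (a list of row indices).
    clusters = [[i] for i in range(len(points))]
    merges = []  # merge history, the information a dendrogram draws
    while len(clusters) > n_clusters:
        best = None
        # Step 2: find the pair of clusters with the smallest average distance.
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                d = np.mean([
                    np.linalg.norm(points[i] - points[j])
                    for i in clusters[a]
                    for j in clusters[b]
                ])
                if best is None or d < best[0]:
                    best = (d, a, b)
        d, a, b = best
        merges.append((clusters[a], clusters[b], d))
        # Replace the two merged clusters with their union.
        merged = clusters[a] + clusters[b]
        clusters = [c for k, c in enumerate(clusters) if k not in (a, b)] + [merged]
    return clusters, merges

data = [[1, 1], [2, 3], [3, 5], [4, 5], [6, 6], [7, 5]]
clusters, merges = naive_agglomerative(data, n_clusters=2)
print(clusters)
for left, right, height in merges:
    print(left, right, round(float(height), 3))
```

Each entry in merges records which two clusters were joined and at what distance, which is the information a dendrogram visualizes.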

Agglomerative clustering is a useful method for grouping data points into meaningful clusters, and it is often used in areas such as image processing, natural language processing, and customer segmentation. To learn the basics of agglomerative clustering, you can read this article on Agglomerative Clustering Numerical Example, Advantages, and Disadvantages.

Linkage Methods in Agglomerative Clustering

In agglomerative clustering, the process of merging clusters is done using a specific linkage method. There are several linkage methods that can be used in agglomerative clustering, each with its own characteristics and assumptions.

Some common linkage methods include:

- Single linkage: This method defines the distance between two clusters as the minimum distance between any two points, one from each cluster. It results in clusters that are closely connected but may be elongated and non-compact.

- Complete linkage: This method defines the distance between two clusters as the maximum distance between any two points, one from each cluster. It results in clusters that are compact, but it is sensitive to outliers.

- Average linkage: This method defines the distance between two clusters as the average distance over all pairs of points, one from each cluster. It tends to produce clusters that are more balanced than those produced by single or complete linkage.

- Ward’s method: This method merges the pair of clusters whose merger gives the smallest increase in the sum of squared errors within clusters. It tends to produce clusters that are more compact and balanced than those produced by other methods.

The choice of linkage method can significantly impact the resulting clusters in agglomerative clustering. It is important to carefully consider the characteristics of the data and the desired properties of the clusters when selecting a linkage method.
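To make the difference between the linkage methods concrete, the following sketch computes each criterion for two small clusters. The clusters reuse the points C, D and E, F from the dataset used later in this article. The variable names are illustrative, and the Ward value shown is the increase in the within-cluster sum of squares caused by the merge, which is one common way of writing Ward’s criterion.

```python
import numpy as np

# Two small example clusters: points C, D and points E, F.
cluster_1 = np.array([[3, 5], [4, 5]], dtype=float)
cluster_2 = np.array([[6, 6], [7, 5]], dtype=float)

# All pairwise euclidean distances between points of the two clusters.
pairwise = np.linalg.norm(cluster_1[:, None, :] - cluster_2[None, :, :], axis=2)

single = pairwise.min()     # single linkage: closest pair
complete = pairwise.max()   # complete linkage: farthest pair
average = pairwise.mean()   # average linkage: mean over all pairs

# Ward: increase in within-cluster sum of squares if the clusters are merged.
n1, n2 = len(cluster_1), len(cluster_2)
centroid_gap = np.linalg.norm(cluster_1.mean(axis=0) - cluster_2.mean(axis=0))
ward_increase = (n1 * n2) / (n1 + n2) * centroid_gap ** 2

print("single:", single)
print("complete:", complete)
print("average:", average)
print("ward increase:", ward_increase)
```

The same pair of clusters gets a different distance under each method, which is why the merge order, and therefore the final clusters, can change with the linkage method.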

I have already discussed the single, complete, and average linkage methods in this article on how to plot a dendrogram in Python. In this article, we will use Ward’s linkage method for agglomerative clustering in Python using the sklearn module.

Plot Dendrogram in Agglomerative Clustering (Using Scipy Module)

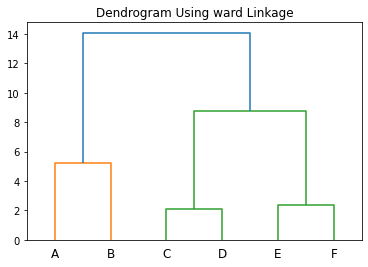

I have already discussed how to plot a dendrogram in Python. Here, we will follow the steps discussed in the previous article. However, we will use Ward’s method to create the linkage matrix. For this, we will set the “method” parameter to “ward” in the linkage() function.

After creating the linkage matrix using the linkage() function, you can pass the linkage matrix to the dendrogram() function to create a dendrogram as shown in the following example.

import pandas as pd

from scipy.cluster.hierarchy import dendrogram, linkage

import matplotlib.pyplot as plt

data = [[1, 1], [2, 3], [3, 5], [4, 5], [6, 6], [7, 5]]

points = ["A", "B", "C", "D", "E", "F"]

df = pd.DataFrame(data, columns=['xcord', 'ycord'], index=points)

# Pass the raw observations. linkage() treats a 2-D array as observation vectors,

# so a square distance matrix would be misread as six 6-dimensional points.

linkage_matrix = linkage(df.values, method="ward")

dendrogram(linkage_matrix, labels=points)

plt.title("Dendrogram Using ward Linkage")

plt.show()

Output:

In the above output, you can observe that we have created a dendrogram using Ward’s linkage method in Python.
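A dendrogram can also be cut into a fixed number of flat clusters without leaving scipy. The following is a minimal sketch, assuming the fcluster() function from scipy.cluster.hierarchy with the “maxclust” criterion and the same six points. The linkage matrix here is built directly from the raw coordinates.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage

data = np.array([[1, 1], [2, 3], [3, 5], [4, 5], [6, 6], [7, 5]], dtype=float)
points = ["A", "B", "C", "D", "E", "F"]

# Build the Ward linkage matrix from the raw observations.
linkage_matrix = linkage(data, method="ward")

# Cut the tree so that at most two flat clusters remain.
flat_labels = fcluster(linkage_matrix, t=2, criterion="maxclust")

for name, label in zip(points, flat_labels):
    print(name, label)
```

The label numbers themselves are arbitrary. What matters is which points share a label.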

Create Clusters in Agglomerative Clustering Using the Sklearn Module in Python

To create clusters using the sklearn module, we will use the AgglomerativeClustering class defined in the sklearn.cluster module. The AgglomerativeClustering() constructor has the following syntax.

class sklearn.cluster.AgglomerativeClustering(n_clusters=2, *, metric=None, linkage='ward')

Here,

- The n_clusters parameter takes the number of clusters to be formed. By default, it is 2.

- The metric parameter takes as input the metric to use when calculating the distance between instances in a feature array. If the metric is a string or callable, it must be one of the options allowed by sklearn.metrics.pairwise_distances for its metric parameter. If linkage is “ward”, only “euclidean” is accepted in the metric parameter.

- If the metric parameter is set to “precomputed”, a distance matrix (instead of a similarity matrix) is needed as input for the fit() method while training the model. The “precomputed” option or a callable is typically passed to the metric parameter when performing agglomerative clustering on datasets having categorical or mixed data types. In the signature shown above, the metric parameter defaults to None, in which case euclidean distance is used. From sklearn 1.4 onward, the default is “euclidean” and passing None is deprecated. The metric parameter was added in sklearn 1.2. Before that version, you need to use the “affinity” parameter instead. The “affinity” parameter was deprecated in sklearn 1.2 and removed in version 1.4.

- The linkage parameter is used to decide which linkage method to use. By default, it is set to “ward”.

To create clusters using the sklearn module in Python, we will first create an untrained agglomerative clustering model using the AgglomerativeClustering() constructor. Here, we will set n_clusters=2 to create two clusters from the dataset.

After obtaining the untrained model, we will invoke the fit() method on it. The fit() method accepts the input dataset, trains the model on it, and returns the trained model.

Once the model is trained, we can use its labels_ attribute to find the cluster labels of all the data points.

You can observe the entire process in the following example.

import pandas as pd

from sklearn.cluster import AgglomerativeClustering

data = [[1, 1], [2, 3], [3, 5],[4,5],[6,6],[7,5]]

points=["A","B","C","D","E","F"]

df = pd.DataFrame(data, columns=['xcord', 'ycord'],index=points)

model = AgglomerativeClustering(n_clusters=2, linkage='ward')

model.fit(df)

labels = model.labels_

print("The data points are:")

print(data)

print("The Cluster Labels are:")

print(labels)

Output:

The data points are:

[[1, 1], [2, 3], [3, 5], [4, 5], [6, 6], [7, 5]]

The Cluster Labels are:

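[1 1 0 0 0 0]

In the above example, we have created two clusters from the given points. The first two points, A and B, are assigned to cluster 1, and the remaining four points are assigned to cluster 0. After observing the output labels, we can verify that the clusters are consistent with the dendrogram.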
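Instead of fixing the number of clusters in advance, you can also let a merge-distance cutoff decide it. The following is a minimal sketch, assuming the distance_threshold parameter of AgglomerativeClustering, which requires n_clusters=None. The threshold value 3.0 is an arbitrary choice for this small dataset, picked to fall between the lower and the higher merges in the Ward tree.

```python
from sklearn.cluster import AgglomerativeClustering

data = [[1, 1], [2, 3], [3, 5], [4, 5], [6, 6], [7, 5]]

# n_clusters must be None when distance_threshold is given.
# Clusters whose linkage distance is at or above the threshold are not merged.
model = AgglomerativeClustering(n_clusters=None, distance_threshold=3.0, linkage="ward")
threshold_labels = model.fit_predict(data)

print("Number of clusters found:", model.n_clusters_)
print("Cluster labels:", threshold_labels)
```

A larger threshold allows more merges and gives fewer clusters, while a smaller threshold gives more clusters.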

Conclusion

In this article, we have discussed how to perform agglomerative clustering using the sklearn module in Python. We also discussed how to create a dendrogram using Ward’s linkage method.

To learn more about machine learning, you can read this article on regression in machine learning. You might also like this article on polynomial regression using sklearn in Python.

I hope you enjoyed reading this article. Stay tuned for more informative articles.

Happy Learning!